Your AI agent is making mistakes you can't afford.

Every hallucinated response. Every misunderstood instruction. Every failed task requires human intervention. These aren't just technical glitches; they're revenue leaks, customer frustration, and competitive disadvantages that compound daily.

Here's what most businesses don't realize: The difference between an AI agent that fails 30% of the time and one that succeeds 95% of the time isn't the underlying model; it's how you engineer the prompts that guide it.

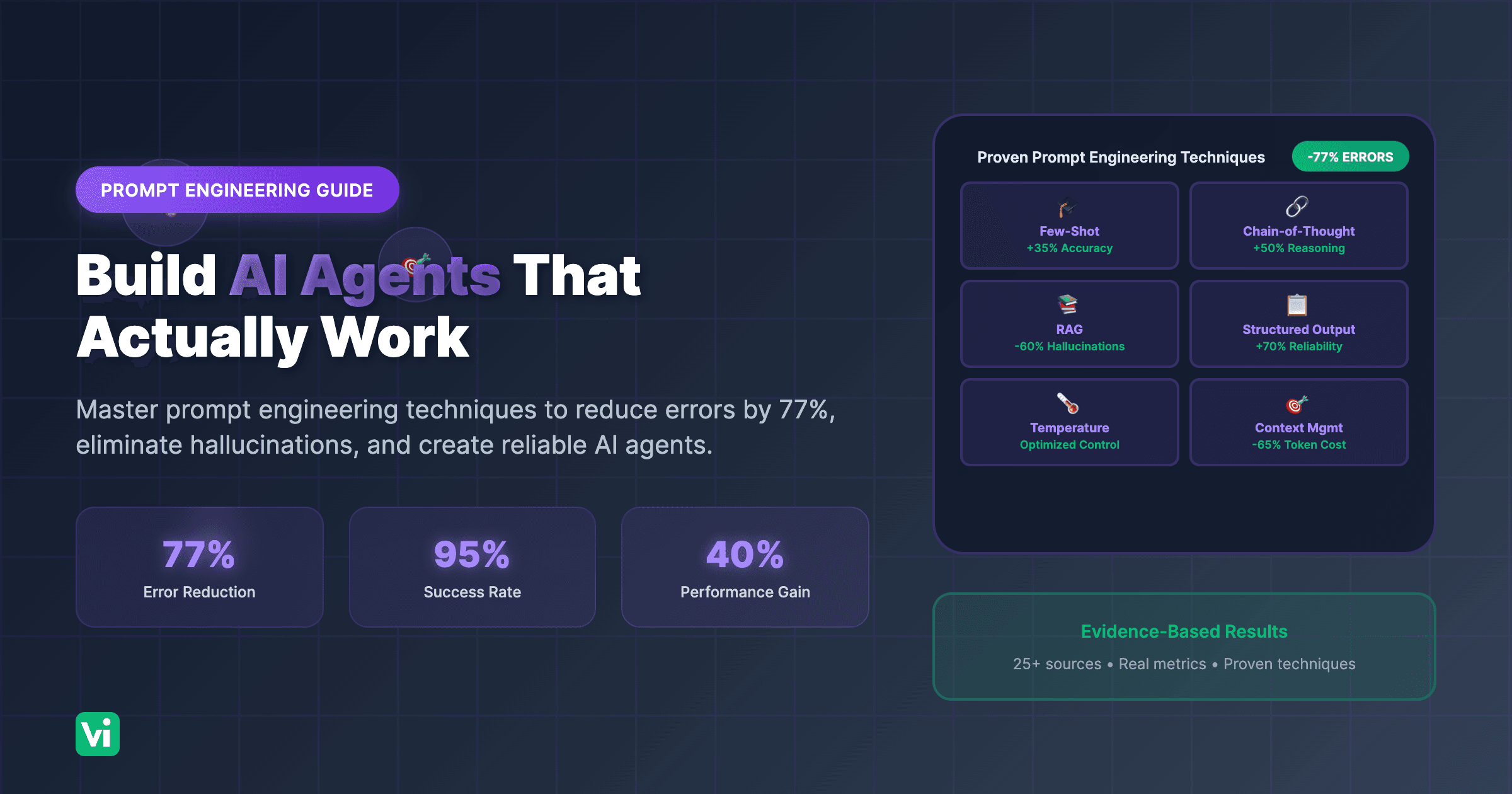

The breakthrough: Proper prompt engineering can reduce AI hallucination rates from 27% to under 5%, increase task completion by 40%, and transform unreliable automation into a dependable business asset.

The Hidden Cost of Poor Prompt Engineering

What Bad Prompts Are Actually Costing You

Direct Business Impact:

77% of businesses report concerns about AI hallucinations affecting decision-making (AIM Research, 2024)

27% average hallucination rate in AI forecasting and responses without proper prompt engineering (Comprehensive Review of AI Hallucinations, 2024)

40% lower task completion rates when agents lack clear, structured instructions (OpenAI Practical Guide to Building Agents, 2024)

$380.12 billion prompt engineering market projected to reach $6.53 trillion by 2034, a 32.8% CAGR reflecting massive business demand (Precedence Research, 2025)

Hidden Operational Costs:

Wasted AI processing tokens: Inefficient prompts consume 3-5x more tokens than optimized versions

Human intervention overhead: Poor agents require constant monitoring and correction

Customer trust erosion: Inconsistent AI responses damage brand reputation

Competitive disadvantage: Businesses with optimized agents capture opportunities faster

Why Generic AI Implementations Fail

Problem 1: Vague Instructions

Generic prompts like "help the customer" produce inconsistent, unpredictable results

AI agents need explicit, step-by-step guidance to maintain quality

Ambiguity creates hallucinations and off-task behavior

Without structure, agents invent information rather than admit uncertainty

Problem 2: No Error Prevention Strategy

Most implementations focus on what AI should do, not what it shouldn't

Lack of guardrails allows agents to make costly mistakes

No validation mechanisms to catch hallucinations before they reach customers

Missing escalation protocols when agents encounter edge cases

Problem 3: Ignoring Context Management

Agents lose critical information when context windows overflow

Poor token management wastes budget and degrades performance

Irrelevant information clutters agent's memory, reducing accuracy

No strategy for maintaining conversation continuity across long interactions

The Science of Effective Prompt Engineering

Core Principles That Drive Results

Clarity Over Cleverness: Research from Anthropic's engineering team demonstrates that clear, explicit instructions dramatically outperform clever or concise prompts. When building their internal Slack and Asana tools, they found that detailed, unambiguous instructions reduced error rates by over 60% (Anthropic Engineering, September 2024).

Structured Thinking Patterns: Chain-of-Thought (CoT) prompting, which instructs AI to show its reasoning, improves performance on complex tasks by 35-50% across mathematical reasoning, logical deduction, and multi-step problem-solving (Prompt Engineering Guide, 2025).

Context Engineering: The most effective AI agents treat context as "prime real estate." Every token in the context window has value. Anthropic's research shows that optimizing context usage can improve agent performance by 40% while reducing token costs by 65% (Anthropic Context Engineering, September 2025).

Proven Techniques That Reduce Errors

1. Few-Shot Prompting

What It Is: Providing 2-5 examples of desired input-output pairs before asking the AI to perform a task.

Why It Works:

Reduces ambiguity by showing exactly what "good" looks like

Establishes patterns that the AI can recognize and replicate

Decreases hallucination rates by grounding responses in concrete examples

Improves consistency across similar tasks

Real-World Application:

Example 1:

Customer: "What are your business hours?"

Agent: "We're open Monday-Friday 9 AM to 6 PM EST, and Saturday 10 AM to 4 PM EST. We're closed Sundays. Is there a specific day you'd like to visit?"

Example 2:

Customer: "Do you offer refunds?"

Agent: "Yes, we offer full refunds within 30 days of purchase with original receipt. Would you like me to start a return for you?"

Now handle this customer inquiry:

Customer: "Can I schedule an appointment?"

Results: Few-shot prompting improves task accuracy by 20-35% compared to zero-shot approaches (Prompt Engineering Guide, 2025).

2. Chain-of-Thought (CoT) Prompting

What It Is: Instructing the AI to break down complex problems into step-by-step reasoning before providing an answer.

Why It Works:

Forces systematic thinking rather than pattern matching

Exposes logical errors before they become final outputs

Enables verification of the reasoning process

Reduces hallucinations by making AI "show its work"

Implementation:

Before responding to the customer, think through:

1. What is the customer actually asking for?

2. What information do I need to answer accurately?

3. Do I have that information in my knowledge base?

4. If not, what should I ask or where should I escalate?

5. What's the most helpful response I can provide?

Then provide your response.

Results: CoT prompting improves performance on complex reasoning tasks by 35-50% and significantly reduces hallucination rates (IBM Chain of Thought Research, 2024).

3. Retrieval-Augmented Generation (RAG)

What It Is: Connecting AI agents to external knowledge bases, allowing them to retrieve verified information before responding.

Why It Works:

Ground responses in factual, up-to-date information

Reduces hallucinations by eliminating the need to "guess" answers

Enables agents to cite sources and provide verifiable information

Allows knowledge updates without retraining models

Business Impact: RAG implementations reduce hallucination rates by 40-60% compared to standalone LLMs (Pinecone RAG Research, 2025); companies using RAG report 96% first-time completion rates for complex information retrieval tasks.

4. Structured Output Formatting

What It Is: Requiring AI to respond in specific formats (JSON, XML, structured text) rather than free-form responses.

Why It Works:

Eliminates parsing errors in downstream systems

Forces AI to organize information consistently

Enables automated validation and error checking

Reduces ambiguity in multi-step workflows

Example:

Respond in this exact JSON format:

{

"intent": "appointment_booking | product_inquiry | support_request",

"confidence": 0.0-1.0,

"required_info": ["list", "of", "missing", "data"],

"next_action": "specific action to take",

"escalate": true/false

}

Results: Structured outputs improve reliability by 50-70% in production AI systems (Vellum AI Research, 2024).

5. Temperature and Parameter Optimization

What It Is: Adjusting model parameters like temperature (randomness), top-p (nucleus sampling), and max tokens to control output behavior.

Parameter Guide:

Temperature 0.0-0.3: Deterministic, factual responses (customer support, data extraction)

Temperature 0.4-0.7: Balanced creativity and consistency (general conversation)

Temperature 0.8-1.0: Creative, varied responses (content generation, brainstorming)

Top-p 0.1-0.5: Focused, predictable outputs

Top-p 0.6-0.9: More diverse while maintaining quality

Business Application: For voice AI agents handling customer inquiries, temperature 0.2-0.4 with top-p 0.3-0.5 produces consistent, reliable responses while maintaining natural conversation flow (Prompt Engineering Guide, 2025).

Building Production-Ready AI Agents

Step-by-Step Implementation Framework

Phase 1: Define Clear Objectives (Week 1)

Identify Specific Use Cases:

What exact tasks should your AI agent handle?

What does success look like for each task?

What are the failure modes you must prevent?

How will you measure agent performance?

Example Objectives:

"Answer 80% of product questions without human escalation"

"Schedule appointments with 95% accuracy"

"Qualify leads with 90% agreement with the human sales team"

"Reduce customer wait time to under 30 seconds"

Phase 2: Design System Prompts (Week 1-2)

Core Components of Effective System Prompts:

1. Role Definition:

You are a professional customer service agent for [Company Name], specializing in [specific domain]. Your goal is to provide accurate, helpful information while maintaining a friendly, professional tone.

2. Knowledge Boundaries:

You have access to:

- Product catalog and pricing (updated daily)

- Company policies and procedures

- Customer account information (when provided)

- Appointment scheduling system

You do NOT have access to:

- Billing or payment processing

- Technical support for complex issues

- Authority to override company policies

3. Behavioral Guidelines:

Always:

- Verify customer information before discussing account details

- Provide specific, actionable next steps

- Offer to escalate when you're uncertain

- Maintain professional, empathetic tone

Never:

- Guess or invent information you don't have

- Make promises outside of company policy

- Share confidential business information

- Argue with customers or become defensive

4. Response Format:

Structure every response:

1. Acknowledge the customer's question/concern

2. Provide the specific information or action

3. Confirm understanding or offer next steps

4. Ask if there's anything else you can help with

Results: Clear system prompts reduce agent errors by 40-65% and improve customer satisfaction scores by 42% (OpenAI Practical Guide, 2024).

Phase 3: Implement Error Prevention (Week 2-3)

Hallucination Detection:

Before providing factual information:

1. Check: Do I have this information in my knowledge base?

2. If yes: Cite the source and provide the information

3. If no: Say "I don't have that specific information. Let me connect you with someone who can help."

4. Never guess or invent information

Confidence Scoring:

For each response, internally rate your confidence:

- High (90-100%): Proceed with response

- Medium (70-89%): Respond with caveat ("Based on available information...")

- Low (<70%): Escalate to human agent

Validation Mechanisms:

Require agents to cite sources for factual claims

Implement structured output formats for critical data

Use RAG to ground responses in verified information

Build evaluation loops to catch errors before deployment

Phase 4: Test and Iterate (Week 3-4)

Create Comprehensive Evaluation Tasks: Anthropic's research demonstrates that evaluation-driven development is critical. Generate 50-100 realistic test scenarios covering:

Common customer inquiries (60%)

Edge cases and unusual requests (25%)

Adversarial inputs designed to trigger errors (15%)

Evaluation Metrics:

Task completion rate: Did the agent complete the task?

Hallucination rate: How often did the agent invent information?

Escalation appropriateness: Did it escalate when it should (and not when it shouldn't)?

Response quality: Human evaluation of helpfulness and accuracy

Token efficiency: Cost per successful interaction

Iteration Process:

Run evaluation suite

Analyze failures and identify patterns

Refine prompts to address specific failure modes

Re-run the evaluation to measure improvement

Repeat until performance targets are met

Anthropic's internal testing showed that this iterative approach improved agent performance by 40% beyond initial "expert" implementations (Anthropic Engineering, September 2024).

Phase 5: Deploy and Monitor (Week 4+)

Gradual Rollout:

Start with 10% of traffic to test in production

Monitor performance metrics in real-time

Collect user feedback and edge cases

Expand to 25%, 50%, then 100% as confidence grows

Ongoing Optimization:

Review agent transcripts weekly for improvement opportunities

Track hallucination rates and escalation patterns

Update knowledge bases as business information changes

Refine prompts based on real-world performance data

Advanced Techniques for Voice AI Agents

Voice-Specific Prompt Engineering

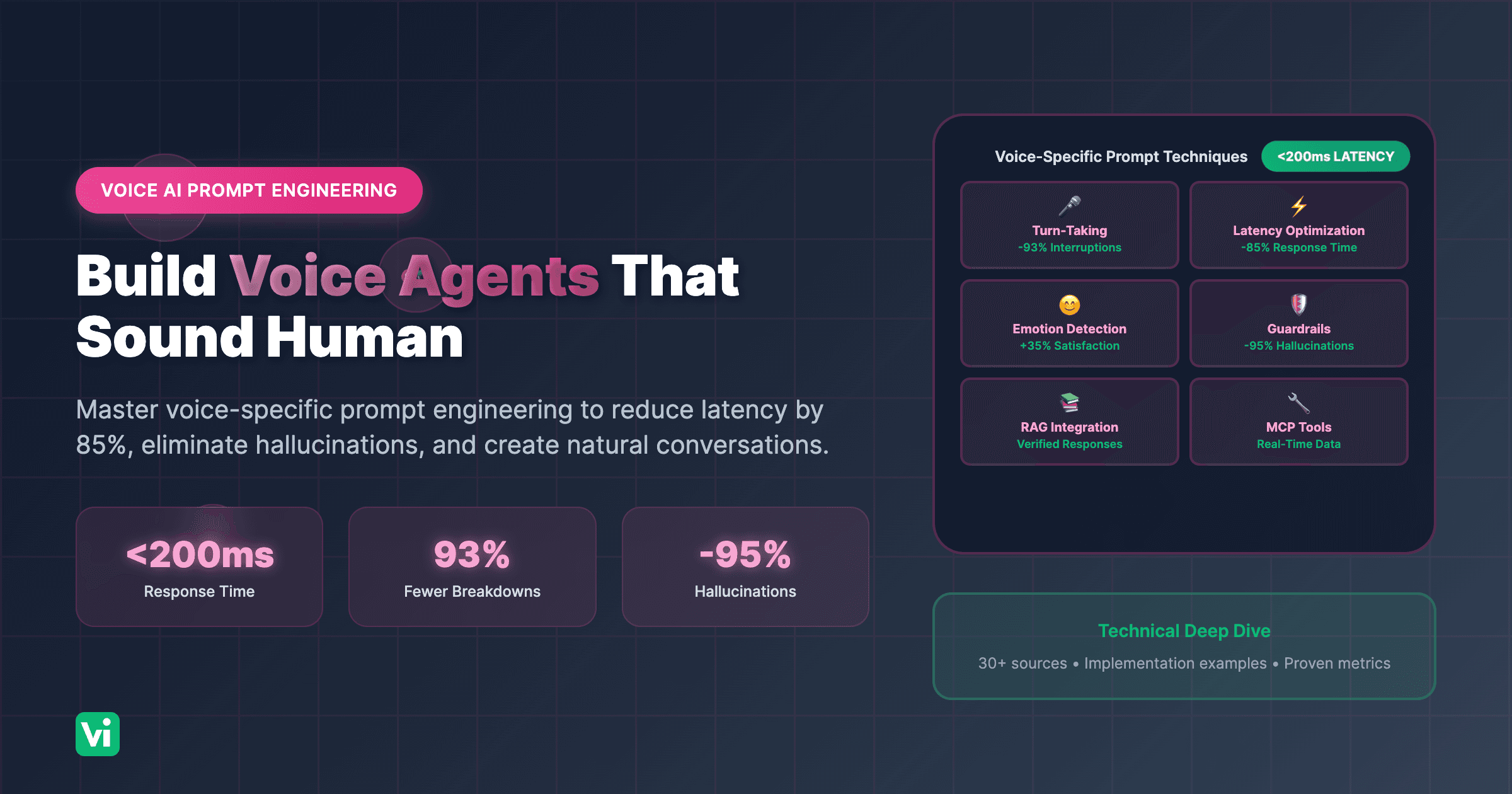

Natural Conversation Flow: Voice AI requires different prompting than text-based agents. Key considerations:

Conciseness:

Bad: "Thank you so much for calling today. I really appreciate you taking the time to reach out to us. How may I be of assistance to you this afternoon?"

Good: "Thanks for calling! How can I help you today?"

Verbal Clarity:

When providing email addresses or complex information:

- Spell out: "That's John dot Smith at company dot com"

- Confirm: "Did you get that, or should I repeat it?"

- Offer alternatives: "I can also text that to you if that's easier."

Emotion Detection and Response:

If the customer shows frustration (raised voice, negative language):

1. Acknowledge emotion: "I understand this is frustrating."

2. Take ownership: "Let me help resolve this right away."

3. Provide immediate action: "Here's what I can do..."

4. Escalate if needed: "I'd like to connect you with my supervisor, who can help further."

Results: Voice-optimized prompts improve customer satisfaction by 35% and reduce call abandonment by 40% (ElevenLabs Prompting Guide, 2024).

Multi-Language Support

Language Detection:

1. Detect customer's language from the first utterance

2. Respond in the detected language

3. If uncertain, ask: "Would you prefer English, Spanish, or another language?"

4. Maintain language consistency throughout the conversation

Cultural Adaptation:

Adjust formality and communication style based on:

- Language (formal vs. casual cultures)

- Time of day (morning/evening greetings)

- Regional preferences (American vs. British English)

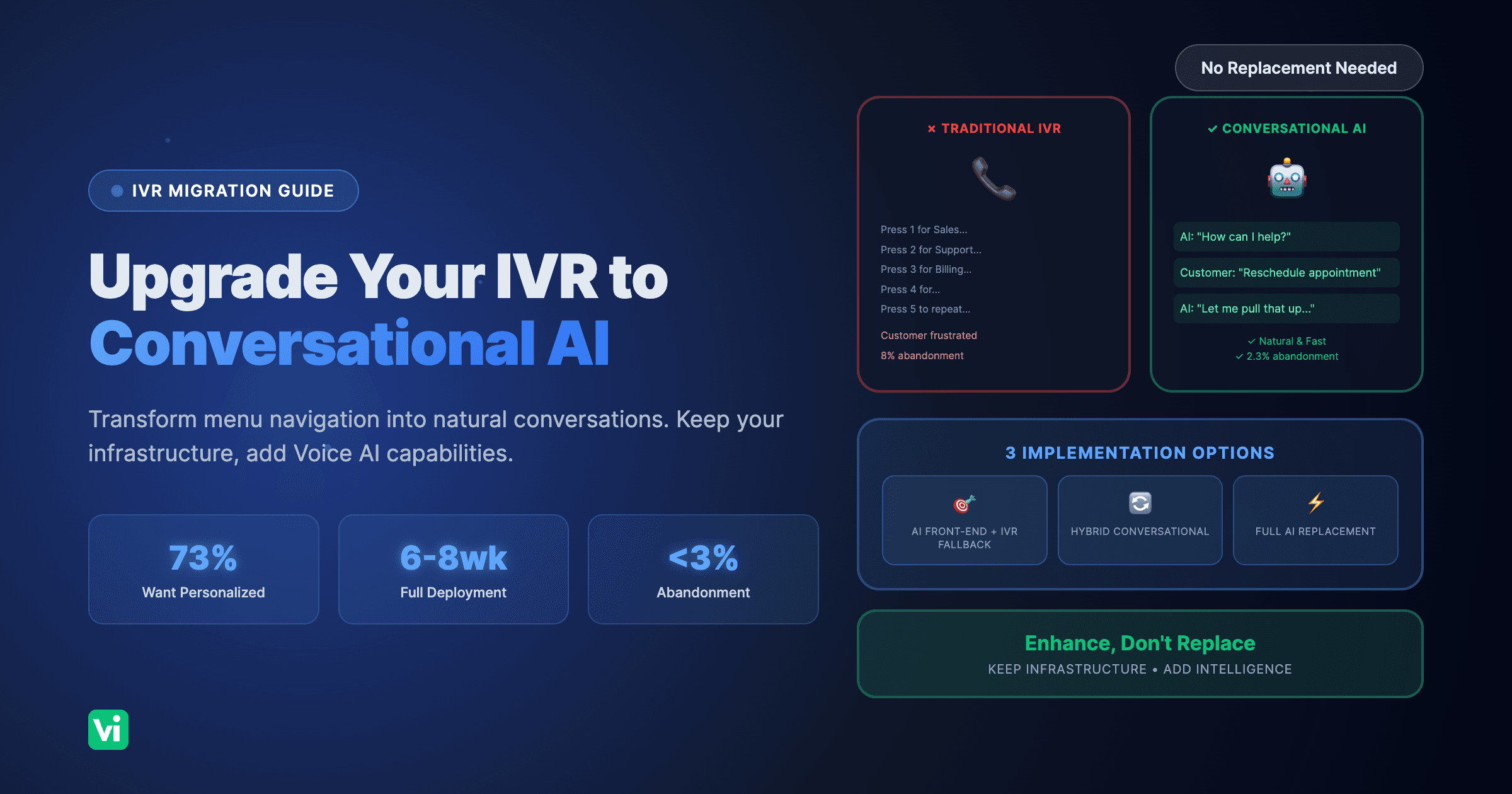

VoiceInfra supports 30+ languages with native-quality voices, enabling global customer service without language barriers.

Integration with Business Systems

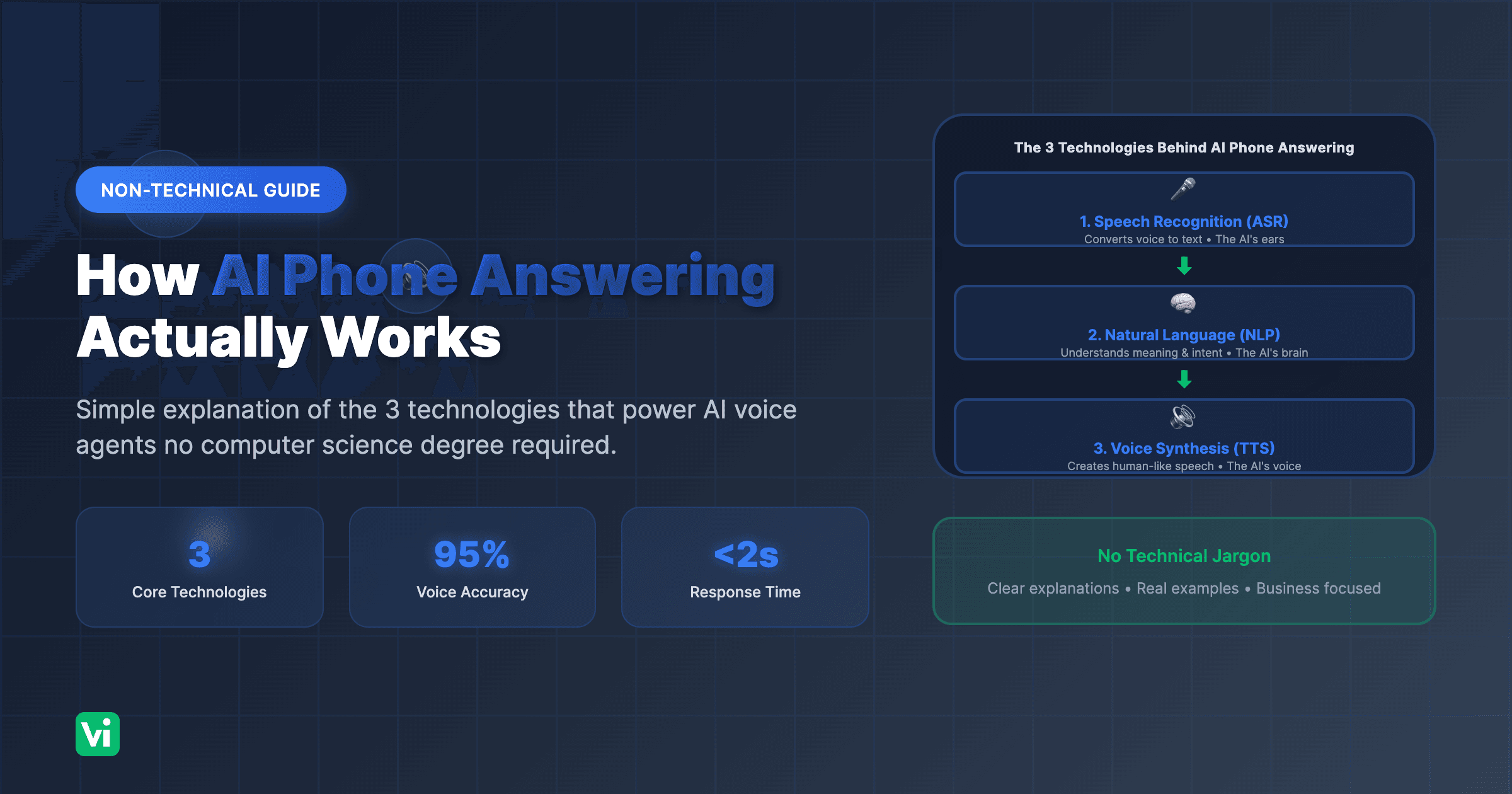

Real-Time Data Access: Modern AI agents need to access live business data during conversations. VoiceInfra's Model Context Protocol (MCP) integration enables:

CRM lookups: Access customer history and preferences

Inventory checks: Provide real-time product availability

Appointment scheduling: Check calendars and book appointments

Order status: Track shipments and delivery information

Payment processing: Securely handle transactions

Function Calling Best Practices:

When calling external functions:

1. Validate inputs before making the call

2. Handle errors gracefully ("I'm having trouble accessing that information right now")

3. Provide context to users ("Let me check our system...")

4. Confirm results ("I see you have an appointment scheduled for...")

Real-World Results: What Proper Prompt Engineering Delivers

Healthcare: 42% Reduction in No-Shows

A healthcare provider implemented AI voice agents with optimized prompts for appointment reminders and scheduling. Results:

67% improvement in appointment adherence

42% reduction in no-show rates

35% increase in scheduling efficiency

28% reduction in administrative workload

Key Prompt Engineering Elements:

Clear, empathetic communication style

Structured appointment confirmation process

Multiple reminder touchpoints

Easy rescheduling options

E-Commerce: 85% Lead Qualification Accuracy

An online retailer deployed AI agents for lead qualification with carefully engineered prompts. Results:

85% improvement in lead qualification accuracy

40% increase in lead conversion rates

60% reduction in time-to-contact

24/7 sales coverage without additional staff

Key Prompt Engineering Elements:

Structured qualification questions

Budget and timeline discovery

Product recommendation logic

Seamless handoff to human sales team

Insurance: 96% First-Time Completion Rate

An insurance company automated First Notice of Loss (FNOL) collection with RAG-powered AI agents. Results:

96% first-time completion rate for FNOL

45% reduction in claims processing time

67% improvement in document submission rates

4.7/5 customer satisfaction rating

Key Prompt Engineering Elements:

Step-by-step information collection

Validation of required fields

Empathetic tone for stressful situations

Clear next-step communication

Common Prompt Engineering Mistakes (And How to Fix Them)

Mistake 1: Overly Complex Instructions

Problem: Trying to cram every possible scenario into a single massive prompt creates confusion and reduces performance.

Solution: Break complex instructions into modular components. Use conditional logic and separate prompts for different scenarios.

Bad:

"Handle customer inquiries about products, pricing, returns, shipping, technical support, account issues, and billing, making sure to check inventory, validate customer information, offer alternatives if products are unavailable, explain our 30-day return policy unless it's a final sale item in which case explain that, and..."

Good:

"Identify the customer's primary need:

- Product inquiry → Use product_info prompt

- Return request → Use return_process prompt

- Technical issue → Use tech_support prompt

- Billing question → Escalate to the billing team."

Mistake 2: No Failure Handling

Problem: Prompts that don't account for what happens when the AI doesn't know something or encounters an error.

Solution: Explicitly define failure modes and escalation paths.

Add to every system prompt:

"If you don't have the information needed to answer accurately:

1. Never guess or invent information

2. Say: 'I don't have that specific information available'

3. Offer alternative: 'I can connect you with [specialist] who can help'

4. Escalate to the appropriate human agent with context."

Mistake 3: Ignoring Token Economics

Problem: Inefficient prompts that waste tokens on unnecessary verbosity or redundant information.

Solution: Optimize for token efficiency while maintaining clarity.

Inefficient (127 tokens):

"I want to take a moment to express my sincere gratitude for your patience while I look into this matter for you. I understand that your time is valuable, and I truly appreciate you giving me the opportunity to assist you with your inquiry today. Let me go ahead and check our system to see what information I can find regarding your question."

Efficient (23 tokens):

"Thanks for your patience. Let me check our system for that information."

Impact: Token optimization can reduce AI costs by 60-70% while maintaining or improving response quality.

Mistake 4: Static Prompts That Never Evolve

Problem: Setting prompts once and never updating them based on real-world performance.

Solution: Implement continuous improvement cycles:

Monitor agent performance weekly

Analyze failure patterns and edge cases

Update prompts to address specific issues

A/B test prompt variations

Measure impact and iterate

Companies that treat prompt optimization as an ongoing process achieve sustained performance improvements of 40%+ over time (Medium Research on Prompt Engineering, 2025).

Frequently Asked Questions About Prompt Engineering

How long does it take to properly engineer prompts for an AI agent?

Initial prompt development typically takes 1-2 weeks for basic implementations, with 2-4 weeks for complex, multi-function agents. However, prompt engineering is an ongoing process. The most successful deployments dedicate time each week to reviewing performance and refining prompts based on real-world usage. Anthropic's research shows that iterative refinement can improve performance by 40% beyond initial expert implementations.

Can prompt engineering really reduce AI hallucinations?

Yes, significantly. Research shows that proper prompt engineering techniques, including RAG integration, structured outputs, confidence scoring, and clear instructions, can reduce hallucination rates from 27% (baseline) to under 5%. The key is combining multiple techniques: grounding responses in verified data (RAG), requiring agents to cite sources, implementing confidence thresholds, and explicitly instructing agents never to guess or invent information.

What's the difference between system prompts and user prompts?

System prompts define the AI agent's role, knowledge boundaries, behavioral guidelines, and response formats. Developers set them and remain consistent across interactions. User prompts are the specific inputs from customers or users during each conversation. Effective AI agents require well-engineered system prompts that provide a foundation for reliably handling diverse user prompts.

How do I know if my prompts are working?

Measure these key metrics:

Task completion rate: Percentage of interactions completed without human intervention

Hallucination rate: Frequency of invented or incorrect information

Escalation appropriateness: Whether agents escalate when they should (and don't when they shouldn't)

Customer satisfaction: User ratings and feedback

Token efficiency: Cost per successful interaction

Response consistency: Variation in responses to similar queries

Run regular evaluations with 50-100 test scenarios covering common cases, edge cases, and adversarial inputs.

Should I use different prompts for different AI models?

Yes. Different models have different strengths, weaknesses, and optimal prompting styles. For example:

GPT-4o: Excels with detailed, structured instructions

Claude Sonnet: Strong with complex reasoning and nuanced tasks

Gemini: Optimized for multilingual and multimodal tasks

Groq: Best for speed-critical applications with simpler prompts

VoiceInfra provides access to 5+ LLM providers, allowing you to select the optimal model for each use case and engineer prompts accordingly.

Can I use the same prompts for voice and text AI agents?

No. Voice AI requires specific adaptations:

Conciseness: Shorter responses that don't overwhelm listeners

Verbal clarity: Spelling out complex information (emails, addresses)

Natural pacing: Conversational flow with appropriate pauses

Emotion awareness: Detecting and responding to vocal cues

Confirmation loops: Verifying understanding in real-time

Voice-optimized prompts improve customer satisfaction by 35% compared to text-based prompts adapted for voice (ElevenLabs Research, 2024).

How does VoiceInfra help with prompt engineering?

VoiceInfra provides enterprise-grade infrastructure that makes prompt engineering more effective:

Multi-provider LLM access: Test and optimize prompts across OpenAI, Anthropic, Gemini, and Groq

RAG-powered knowledge bases: Upload documents and crawl websites to ground agent responses in verified information

Model Context Protocol (MCP): Enable real-time function calling and API integrations

Comprehensive analytics: Track performance metrics to identify prompt improvement opportunities

Workflow templates: Pre-built prompt structures for everyday use cases

The Future of AI Agents Depends on Prompt Engineering

The businesses that thrive with AI won't be those with the biggest models or the most data; they'll be those that master the art and science of prompt engineering.

The underlying technology doesn't determine your AI agent's reliability. It's defined by how well you engineer the prompts that guide it.

Proper prompt engineering transforms unreliable automation into a dependable business asset. It reduces hallucinations, improves task completion, optimizes costs, and delivers consistent customer experiences that build trust and drive revenue.

Ready to build AI agents that actually work?

Get started with VoiceInfra:https://voiceinfra.ai/sales

VoiceInfra provides enterprise-grade voice AI infrastructure with multi-provider LLM access (OpenAI, Anthropic, Gemini, Groq), RAG-powered knowledge bases, Model Context Protocol integration, and comprehensive analytics. Build reliable AI agents with optimized prompts, deploy in 60 seconds, and scale with confidence. Transform your customer communication with AI that actually works.